KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

Development of a mapping car for urban mapping

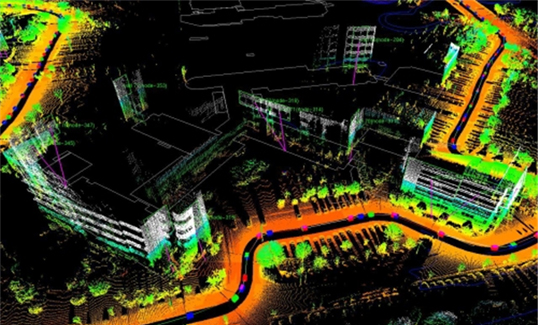

An urban mapping system can create an accurate 3D map through sensor fusion and a visual SLAM algorithm.

Article | Fall 2016

Researchers have worked for years to find ways to build more accurate maps. As autonomous cars begin to appear on the road, obtaining accurate digital maps for them is becoming increasingly important. A team led by Prof. Ayoung Kim from the Department of Civil and Environmental Engineering is working toward such maps for urban areas. The main obstacle to accurate map building in urban areas is limited sensor availability. One popular sensor, GPS, is inexpensive but accurate only to about a meter. More accurate GPS receivers are available at a higher cost, but they do not solve the problem either: urban areas are full of buildings that block the signals even for expensive GPS sensors. This well-known problem in urban mapping is called “GPS blackout in urban canyons”.

Robotics researchers developed an alternative solution called SLAM (Simultaneous Localization and Mapping). SLAM tackles the mapping problem through two tasks performed simultaneously: localization, which estimates the pose of the robot relative to a map, and mapping, which integrates the gathered information into a model of the environment. Fusing information from multiple sources is essential for building a comprehensive map for autonomous vehicles. The team's solution is a mobile sensor platform that uses these SLAM and sensor fusion algorithms.
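To make the idea of fusing multiple sources more concrete, here is a minimal sketch of one classic fusion technique: a one-dimensional Kalman filter that combines odometry (relative motion) with noisy GPS fixes. This is an illustration only, not the team's SLAM pipeline; it covers localization alone, and the class name, noise values, and the use of `None` to model a GPS blackout are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Kalman1D:
    """Scalar Kalman filter tracking a vehicle's position along one axis (hypothetical example)."""
    x: float  # position estimate (m)
    p: float  # variance of the estimate (m^2)

    def predict(self, odom_delta: float, odom_var: float) -> None:
        # Motion update: shift by the odometry displacement; uncertainty grows.
        self.x += odom_delta
        self.p += odom_var

    def update(self, gps_pos: float, gps_var: float) -> None:
        # Measurement update: blend in a GPS fix, weighted by the Kalman gain.
        k = self.p / (self.p + gps_var)
        self.x += k * (gps_pos - self.x)
        self.p *= 1.0 - k


def fuse(
    odometry: Sequence[float],
    gps_fixes: Sequence[Optional[float]],
    odom_var: float = 0.01,  # assumed odometry noise per step
    gps_var: float = 1.0,    # assumed GPS noise (~1 m standard deviation)
) -> List[Tuple[float, float]]:
    """Return (position, variance) after each step."""
    kf = Kalman1D(x=0.0, p=1.0)
    track = []
    for delta, fix in zip(odometry, gps_fixes):
        kf.predict(delta, odom_var)
        if fix is not None:  # None models a GPS blackout in an urban canyon
            kf.update(fix, gps_var)
        track.append((kf.x, kf.p))
    return track
```

For example, `fuse([1.0, 1.0, 1.0], [1.5, None, None])` corrects the first position using the GPS fix, then relies on odometry alone while the variance grows during the simulated blackout. A full SLAM system would additionally estimate the map itself, which this sketch does not attempt.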

Funded by NAVER Corporation, Prof. Ayoung Kim’s team built a sensor system consisting of four cameras, two 3D-LiDARs, and a control box mounted on the roof of the vehicle. The two rear cameras and one 3D-LiDAR are fused through extrinsic calibration. The system can generate accurate maps consisting of large 3D point clouds and their corresponding images. The front cameras can also be used as a monocular or a stereo camera to generate lane, topological, and 3D point cloud maps with a variety of visual SLAM algorithms. The control box not only protects the cables but also controls all of the sensors with an ATmega128 microcontroller. The entire system is protected from rain by an International Protection (IP)-rated enclosure and a weatherproof 3D-LiDAR (VLP-16).
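Once an extrinsic calibration between a camera and a LiDAR is known, each LiDAR point can be transformed into the camera frame and projected onto the image, which is what lets point clouds be paired with image pixels. The sketch below shows that step under stated assumptions: a rotation `R` and translation `t` from LiDAR to camera, an ideal pinhole camera with intrinsics `fx, fy, cx, cy`, and no lens distortion. All function names and numeric values are hypothetical; the article does not describe how the team's calibration is estimated or applied.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


def lidar_to_camera(p: Sequence[float], R: Matrix3, t: Sequence[float]) -> Vec3:
    """Apply the extrinsic transform X_cam = R * X_lidar + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Ideal pinhole projection to pixel coordinates; None if behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_image(points, R, t, fx, fy, cx, cy, width, height):
    """Return (lidar_point, (u, v)) pairs for points that land inside the image."""
    pairs = []
    for p in points:
        uv = project(lidar_to_camera(p, R, t), fx, fy, cx, cy)
        if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
            pairs.append((p, uv))
    return pairs


# Assumed axis conventions: LiDAR x forward, y left, z up;
# camera z forward, x right, y down.
R_EXAMPLE = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
T_EXAMPLE = (0.0, 0.0, 0.0)  # hypothetical: sensors co-located
```

With `fx = fy = 500`, `cx = 320`, `cy = 240`, and a 640×480 image, a point 10 m straight ahead of the LiDAR lands at the image center, while a point behind the vehicle is discarded. In a real system, the pixel found for each point could be used to attach image color or texture to the 3D map.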

* This research was funded by NAVER Corporation.

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

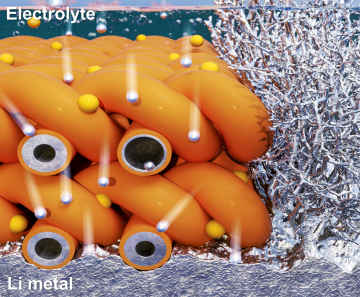

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more