KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

In this study, the authors propose a new theory that explains when and why graph neural networks become powerful. They show that the dependence between connections and node features determines how effective graph neural networks are.

Imagine a community where people are connected to each other, forming a network. In this network, each individual (a node) has unique features, and these features often influence the connections. People tend to make friends based on their gender (a feature) but not their height (another feature). Interestingly, however, people are still more likely to be connected with others of similar height, because gender is correlated with height and therefore indirectly biases connection formation toward similar heights. Features and connections interact in complex ways.

Graph neural networks (GNNs) are the leading deep learning techniques for analyzing such networks. Because of their black-box nature, however, explaining when and why GNNs become powerful is a technical challenge. In particular, how the relationship between features and connections affects GNNs has remained unclear. A recent study titled "Feature Distribution on Graph Topology Mediates the Effect of Graph Convolution: Homophily Perspective" set out to fill this gap.

The first challenge was to measure the complex relationship between features and connections. For instance, given gender and height as features in a social network, the measure should assign a high score to gender and a low score to height. The researchers therefore defined class-controlled feature homophily (CFH). CFH measures how strongly the feature similarity of a node pair influences whether the pair is connected, after controlling for node class as a potential confounding variable.
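
To make the idea of a class-controlled measure concrete, here is a minimal Python sketch. It is a hypothetical simplification, not the paper's definition of CFH: features are scalars, similarity is the negative absolute difference, and for each class the score compares the average similarity of connected same-class pairs with the average over all same-class pairs. The function name `cfh_sketch` and the toy data are illustrative assumptions.

```python
from itertools import combinations
from statistics import mean


def cfh_sketch(features, labels, edges):
    """Hypothetical class-controlled feature homophily score (not the paper's formula).

    For each class, average similarity over connected same-class pairs minus
    average similarity over all same-class pairs; the per-class differences
    are then averaged. Similarity is the negative absolute feature difference.
    """

    def sim(i, j):
        return -abs(features[i] - features[j])

    scores = []
    for c in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == c]
        all_pairs = list(combinations(members, 2))
        linked = [(i, j) for i, j in edges if labels[i] == c and labels[j] == c]
        if not all_pairs or not linked:
            continue  # skip classes without within-class pairs or edges
        baseline = mean(sim(i, j) for i, j in all_pairs)  # expectation from class alone
        observed = mean(sim(i, j) for i, j in linked)     # what the edges actually show
        scores.append(observed - baseline)
    return mean(scores) if scores else 0.0


# Toy data: two classes of four nodes, one scalar feature per node.
features = [1.0, 1.1, 5.0, 5.2, 0.0, 0.2, 3.0, 3.1]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
similar_edges = [(0, 1), (2, 3), (4, 5), (6, 7)]     # links between look-alikes
dissimilar_edges = [(0, 2), (1, 3), (4, 6), (5, 7)]  # links between non-look-alikes
print(cfh_sketch(features, labels, similar_edges))
print(cfh_sketch(features, labels, dissimilar_edges))
```

A positive score means connected nodes are more alike than their class alone would predict; a negative score means the opposite. Restricting every comparison to same-class pairs is what "controls" for class in this sketch.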

The next challenge was to analyze GNNs mathematically in relation to CFH. In previous analytic frameworks, CFH covaried heavily with other important network statistics, obscuring its effect on GNNs. The researchers therefore introduced a new mathematical framework that explicitly controls CFH in GNN analysis. This key technical innovation made it possible, for the first time, to analyze GNN behavior at different CFH levels.
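
The idea of holding class structure fixed while varying CFH can be illustrated with a toy graph generator. The sketch below is an assumption for illustration only, not the authors' analytic framework: every node gets one same-class neighbor, and a hypothetical knob `h` sets how often that neighbor is chosen by feature similarity rather than at random.

```python
import random


def toy_graph(n_per_class, num_classes, h, seed=0):
    """Hypothetical toy generator; h in [0, 1] tunes feature-driven linking within classes.

    Every node links to one same-class partner, so class structure is fixed.
    With probability h the partner is the most feature-similar same-class node;
    otherwise it is drawn uniformly from the node's class.
    """
    rng = random.Random(seed)
    labels = [c for c in range(num_classes) for _ in range(n_per_class)]
    features = [rng.gauss(float(c), 1.0) for c in labels]  # class c centered at c (illustrative)
    edges = []
    for i, c in enumerate(labels):
        peers = [j for j, y in enumerate(labels) if y == c and j != i]
        if rng.random() < h:
            j = min(peers, key=lambda k: abs(features[k] - features[i]))
        else:
            j = rng.choice(peers)
        edges.append((i, j))
    return features, labels, edges


def mean_edge_gap(features, edges):
    """Average absolute feature difference across edges (smaller = more feature-driven)."""
    return sum(abs(features[i] - features[j]) for i, j in edges) / len(edges)


for h in (0.0, 0.5, 1.0):
    f, y, e = toy_graph(n_per_class=50, num_classes=2, h=h, seed=1)
    print(f"h={h}: mean feature gap across edges = {mean_edge_gap(f, e):.3f}")
```

Raising `h` shrinks the feature gap across edges while every edge stays within its class, so feature-driven linking changes without altering class-driven linking.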

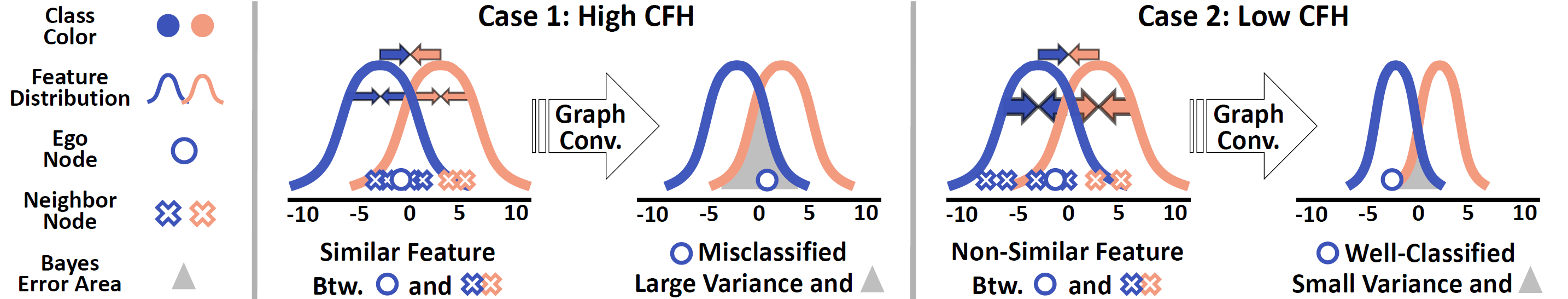

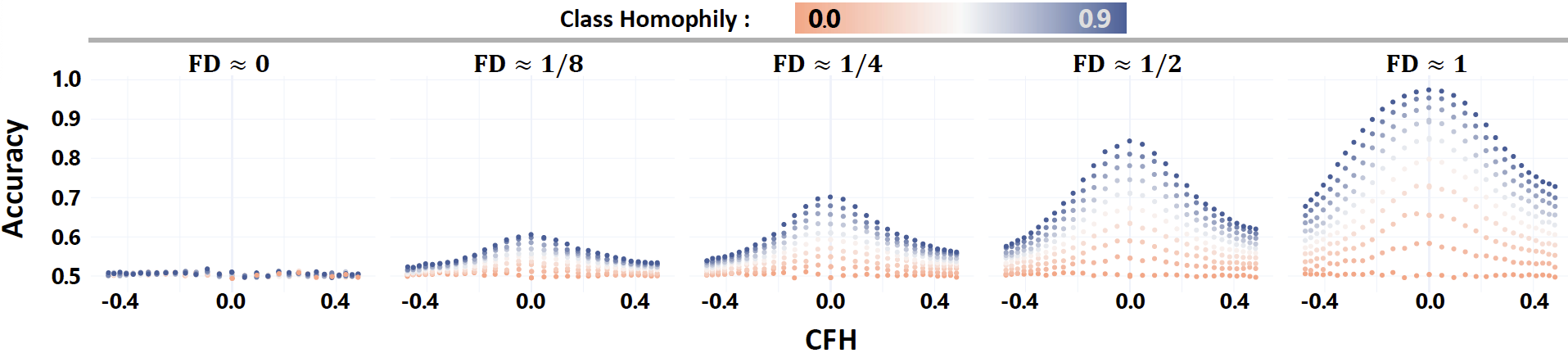

The mathematical analysis revealed that CFH determines the power of GNNs. In particular, the researchers found that CFH moderates a GNN's capacity to pull each node's features toward the mean of the feature distribution (Figure 1). The smaller the CFH, the more similar the features of same-class nodes become after GNN processing, and the better the GNN predicts node classes (Figure 2).
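
The mechanism in Figure 1 can be mimicked with a toy experiment. This is a sketch under simplifying assumptions, not the paper's analysis: a single class with scalar features centered at 0, one round of plain mean aggregation as a stand-in for graph convolution, "high CFH" modeled as linking each node to its most feature-similar nodes, and "low CFH" modeled as linking to random same-class nodes.

```python
import random
from statistics import pvariance


def mean_aggregate(x, neighbors):
    """One mean-aggregation step (a simple stand-in for graph convolution)."""
    return [(x[i] + sum(x[j] for j in nbrs)) / (1 + len(nbrs)) for i, nbrs in enumerate(neighbors)]


rng = random.Random(0)
n, k = 200, 5  # nodes in a single class and neighbors per node (illustrative values)
x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # the class feature mean is 0

# High CFH (assumed model): each node links to its k most feature-similar nodes.
high_cfh = [
    sorted((j for j in range(n) if j != i), key=lambda j: abs(x[j] - x[i]))[:k]
    for i in range(n)
]
# Low CFH (assumed model): each node links to k nodes drawn at random from the class.
low_cfh = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]

var_before = pvariance(x)
var_high = pvariance(mean_aggregate(x, high_cfh))
var_low = pvariance(mean_aggregate(x, low_cfh))
print(f"spread around the class mean: before={var_before:.3f}, "
      f"high CFH={var_high:.3f}, low CFH={var_low:.3f}")
```

With random (low-CFH) neighbors, averaging pulls each node toward the class mean and the spread shrinks sharply; with feature-similar (high-CFH) neighbors, each node is averaged with near-copies of itself and the spread barely changes.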

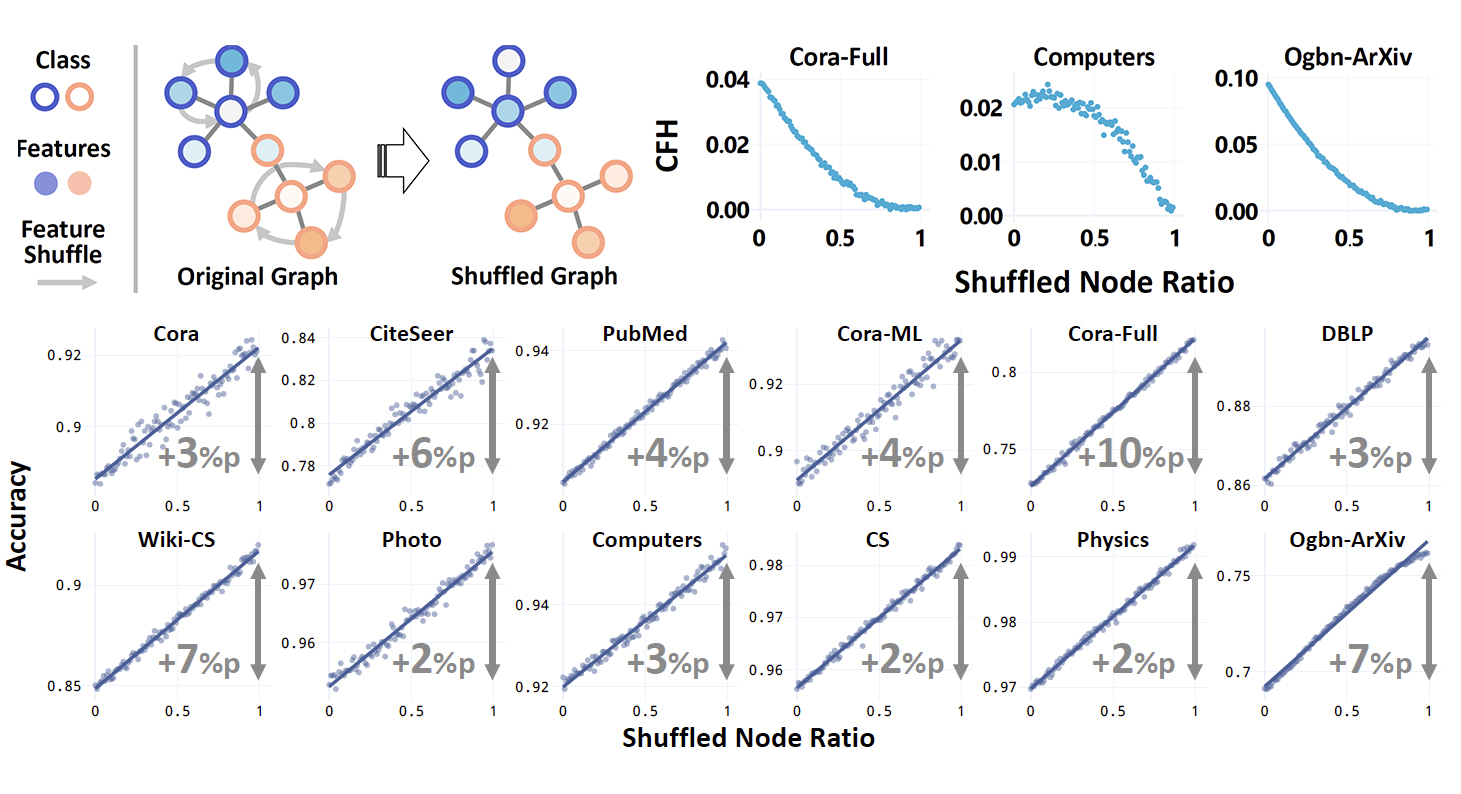

The theory also held up empirically. The researchers used 24 benchmark network datasets and randomly shuffled the node features of each dataset to lower its CFH. Consistent with their theory, shuffling node features (i.e., lowering CFH) led to better GNN performance (Figure 3). The authors therefore concluded that CFH explains when and why GNNs become powerful.
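
A shuffle of the kind described above can be sketched as follows. The details are assumptions: the sketch permutes feature values only among nodes of the same class (so each class's feature distribution is unchanged), keeps the edges fixed, and uses the mean feature gap across edges as a rough proxy for CFH. The helper names are hypothetical.

```python
import random


def shuffle_within_class(features, labels, seed=0):
    """Permute feature values among nodes of the same class; edges are left untouched."""
    rng = random.Random(seed)
    shuffled = list(features)
    for c in set(labels):
        idx = [i for i, y in enumerate(labels) if y == c]
        vals = [features[i] for i in idx]
        rng.shuffle(vals)
        for i, v in zip(idx, vals):
            shuffled[i] = v
    return shuffled


def mean_edge_gap(features, edges):
    """Average absolute feature difference across edges (a rough CFH proxy)."""
    return sum(abs(features[i] - features[j]) for i, j in edges) / len(edges)


# Toy graph: 100 nodes in 2 classes; within each class, nodes are chained in feature order,
# so every edge joins feature-similar nodes (high CFH by construction).
rng = random.Random(42)
labels = [i % 2 for i in range(100)]
features = [rng.gauss(2.0 * y, 1.0) for y in labels]
edges = []
for c in (0, 1):
    idx = sorted((i for i, y in enumerate(labels) if y == c), key=lambda i: features[i])
    edges += list(zip(idx, idx[1:]))

shuffled = shuffle_within_class(features, labels, seed=7)
before = mean_edge_gap(features, edges)
after = mean_edge_gap(shuffled, edges)
print(f"mean feature gap across edges: before={before:.3f}, after={after:.3f}")
```

Because the edges were originally drawn between feature-similar nodes, the shuffle breaks that link and the gap across edges grows, which corresponds to lowering CFH in this toy setting.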

Understanding how deep learning models work is a pivotal foundation for GNN research, yet it is especially challenging because of their black-box nature. GNNs are becoming increasingly popular and are widely applied in industry. By offering a new GNN theory, the present work may open up new directions for GNN research and applications.

This study, with Mr. Soo Yong Lee as the first author, was presented at the International Conference on Machine Learning 2024 under the title “Feature Distribution on Graph Topology Mediates the Effect of Graph Convolution: Homophily Perspective.”
