KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

UbiTap: Leveraging acoustic dispersion for ubiquitous touch interface on solid surfaces

A novel sound-based touch localization technology offers a new means of ubiquitous interaction.

Article | Spring 2019

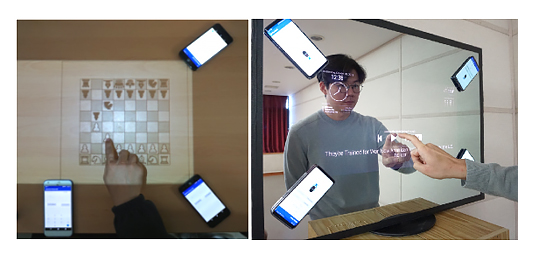

Professor Insik Shin of the School of Computing, KAIST, and his research team have developed UbiTap, a novel sound-based touch localization technology that offers a new means of ubiquitous interaction by making it possible to use surrounding objects, such as furniture or mirrors, as touch input tools. For example, using only the built-in microphones of smartphones or tablets, users can turn nearby tables or walls into virtual keyboards and write lengthy e-mails far more conveniently. Family members can set up a virtual chessboard on the dining table and enjoy a board game after dinner. Existing smart devices, such as smart TVs and smart mirrors, can also be used in smarter ways through the microphones already built into them. If children could play a game of catching germs in their mouths while brushing their teeth in front of a smart mirror, it might help them develop proper brushing habits (see the figure).

The most important requirement for sound-based touch input is to identify the location of each touch very precisely (within about 1 cm of error). Meeting this requirement is challenging, mainly because the technology is meant to be used in diverse and dynamically changing environments. People may use different objects (such as desks, walls, or mirrors) as touch input tools, and the surrounding environment (such as the location of nearby objects or the ambient noise level) can vary. These environmental changes can affect the characteristics of touch sounds.

To address this challenge, the research team focused on analyzing the fundamental properties of touch sounds, especially how they are transmitted through solid surfaces. On solid surfaces, sound undergoes dispersion, a phenomenon in which different frequency components travel at different speeds. Based on this phenomenon, the team observed that 1) the time difference of arrival (TDoA) between frequency components increases in proportion to the sound transmission distance and 2) this linear relationship is not affected by variations in the surrounding environment.
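The proportionality in the first observation can be illustrated with a short derivation. This is a simplified sketch, not the team's own model: it assumes two frequency components $f_1$ and $f_2$ that leave the touch point at the same instant and each travel at a constant speed, $v(f_1)$ and $v(f_2)$, over a distance $d$. The symbols $v$, $d$, and $k$ are introduced here for illustration only.

$$
\Delta t = \frac{d}{v(f_2)} - \frac{d}{v(f_1)} = d\left(\frac{1}{v(f_2)} - \frac{1}{v(f_1)}\right) = k\,d
$$

Under these assumptions the TDoA $\Delta t$ grows linearly with $d$, with a slope $k$ that depends only on how fast the two components travel through the surface. The second observation, that this relationship holds regardless of the surrounding environment, is an empirical finding of the team and does not follow from this sketch.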

Building on these observations, the research team proposed a novel sound-based touch input technology that 1) records touch sounds transmitted through solid surfaces, 2) conducts a simple calibration process to identify the relationship between TDoA and sound transmission distance, and 3) uses that relationship to localize touch inputs accurately.
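The calibration step can be pictured as fitting a straight line through a few taps made at known distances, then inverting that line for new taps. The following is a minimal sketch under that assumption, not UbiTap's actual implementation: the function names `fit_line` and `estimate_distance` are hypothetical, and the calibration values are synthetic numbers chosen to lie exactly on a line.

```python
from statistics import fmean


def fit_line(distances_cm, tdoas_ms):
    """Least-squares fit of tdoa = slope * distance + intercept."""
    mean_d = fmean(distances_cm)
    mean_t = fmean(tdoas_ms)
    sxx = sum((d - mean_d) ** 2 for d in distances_cm)
    sxy = sum((d - mean_d) * (t - mean_t)
              for d, t in zip(distances_cm, tdoas_ms))
    slope = sxy / sxx
    intercept = mean_t - slope * mean_d
    return slope, intercept


def estimate_distance(tdoa_ms, slope, intercept):
    """Invert the calibrated line to recover distance from a measured TDoA."""
    return (tdoa_ms - intercept) / slope


# Synthetic calibration taps (illustrative only, not measured data):
# they follow tdoa = 0.01 * distance + 0.01 exactly.
calib_distances_cm = [5.0, 10.0, 20.0, 30.0]
calib_tdoas_ms = [0.06, 0.11, 0.21, 0.31]

slope, intercept = fit_line(calib_distances_cm, calib_tdoas_ms)

# A new tap whose frequency components arrive 0.16 ms apart.
new_distance_cm = estimate_distance(0.16, slope, intercept)
print(f"estimated distance: {new_distance_cm:.2f} cm")
```

A single distance estimate only says how far the tap is from one microphone. How the system combines such estimates into a position on the surface is not described in this article, so that step is left out of the sketch.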

When the accuracy of the proposed system was measured, the average localization error was below about 0.4 cm on a 17-inch touch screen. In particular, the error stayed below 1 cm on a variety of objects, such as wooden desks, glass mirrors, and acrylic boards, even when the position of nearby objects and the noise level changed dynamically. Experiments with real-world users also drew positive responses on all measured factors, including user experience and accuracy.

UbiTap is expected to encourage the emergence of creative and useful applications that provide rich user experiences. In recognition of this contribution, the work was presented at ACM SenSys, a top-tier conference in the field of mobile computing and sensing, in November this year, where it was named a best paper runner-up.

Demonstration video (http://cps.kaist.ac.kr/research/ubitap/ubitap_demo.mp4)

Most Popular

When and why do graph neural networks become powerful?

Read more

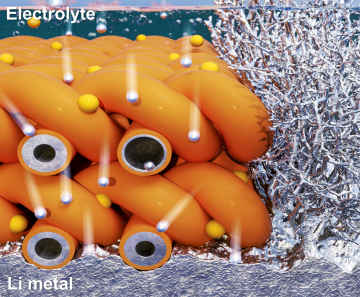

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

Development of a nanoparticle supercrystal fabrication method using linker-mediated covalent bonding reactions

Read more